An overwhelming majority of Australian voters are concerned about how AI technology could be used to spread misinformation through fake election ads created with synthetic footage.

Findings released by Adobe on June 7 suggest four out of five people want the use of AI banned, with many admitting they were struggling to distinguish misinformation from legitimate information.

Meanwhile, a third of respondents said they had partially disengaged from social media to avoid seeing fake ads or videos.

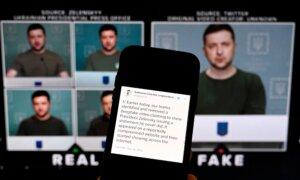

AI-driven misinformation on social media, particularly content made with deep fake technology, is a growing concern globally, especially in the context of elections.

According to the study, false information that spreads rapidly through social media and other digital platforms can significantly affect public opinion and democratic processes.

For its “Future of Trust” study, Adobe surveyed 1,005 people of voting age in Australia and New Zealand.

About 80 percent of respondents thought electoral candidates and parties should ban AI, while 78 percent thought the use of deep fakes posed “a risk to democracy.”

Jennifer Mulveny, Adobe's director of government relations for Asia Pacific, said the survey highlighted how wary voters were about whether social media electioneering could be trusted.

“(The study) underscores the importance of building media literacy among consumers, where they are not only alert to harmful deep fakes but have the tools to discern fact from fiction,” Ms. Mulveny said.

AI Use Unregulated

In 2020, a deep fake video released by the conservative lobby group Advance Australia depicted then-Queensland Premier Annastacia Palaszczuk giving a press conference; the video was evidently fake and was captioned accordingly.

“One approach to mitigating this risk is amending the Act to require labelling of AI-generated content in addition to existing disclosure obligations of advertising and campaign materials.”

“If those messages were authorised, duly authorised, they do not fall afoul of the electoral act currently,” Mr. Rogers said.

The Australian Competition and Consumer Commission (ACCC) conducted an inquiry into misinformation between 2017 and 2022 and issued sector-specific reports that recommended regulatory reforms and various initiatives aimed at improving media literacy.

Mr. Rogers said he would like to see regulations requiring AI-created content to be clearly flagged.

“Digital watermarking could be a very important tool for us to be able to assist,” he said.

“AI is improving the quality of disinformation to make it more undetectable, and it is also then going to spread far more quickly, through multiple channels.”